GLM-4.6: Z.ai's 357B-Parameter Model Takes on DeepSeek and Claude

A new contender has entered the large language model race: Z.ai's GLM-4.6. With 357 billion parameters, it is a substantial step up from its predecessor, and Z.ai positions it as a direct competitor to established models such as DeepSeek-V3.1-Terminus and Claude Sonnet 4.

What Makes GLM-4.6 Special?

GLM-4.6 is more than an incremental update. It improves on its predecessor, GLM-4.5, in several areas that address key limitations of current AI models.

Extended Context Window: 200K Tokens

One of the most notable upgrades is the expansion of the context window from 128K to 200K tokens. This 56% increase lets the model take on more complex agentic tasks and process longer documents in a single pass. For developers and researchers working with extensive documentation or multi-step reasoning tasks, the larger window reduces the need to split inputs into chunks.
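As a quick check on the arithmetic, and as a rough way to decide whether a document fits, the sketch below computes the relative increase and estimates prompt size. It assumes about four characters per token, a common rule of thumb for English text rather than a property of GLM-4.6's tokenizer, and a hypothetical 8,192-token reserve for the model's reply; for real budgeting, count tokens with the model's own tokenizer.

```python
import math

# Context windows in thousands of tokens; the ratio is the same whether
# "K" means 1,000 or 1,024.
GLM_45_CONTEXT_K = 128
GLM_46_CONTEXT_K = 200
GLM_46_CONTEXT_TOKENS = 200_000  # approximate window used for budgeting below

def percent_increase(old: float, new: float) -> float:
    """Relative growth from old to new, as a percentage."""
    return (new - old) / old * 100

def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token count from a characters-per-token heuristic
    (an assumption, not GLM-4.6's real tokenizer)."""
    return math.ceil(len(text) / chars_per_token)

def fits_in_context(text: str, reserved_output: int = 8_192,
                    window: int = GLM_46_CONTEXT_TOKENS) -> bool:
    """True if the estimated prompt plus a hypothetical output reserve fits the window."""
    return estimate_tokens(text) + reserved_output <= window

print(f"{percent_increase(GLM_45_CONTEXT_K, GLM_46_CONTEXT_K):.2f}%")  # 56.25%
```

Reserving room for the output matters because the prompt and the generated reply share the same window.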

Superior Coding Performance

GLM-4.6 improves markedly on GLM-4.5's coding ability, scoring higher on code benchmarks and performing better in real-world coding agents such as Claude Code, Cline, Roo Code, and Kilo Code. Z.ai highlights its ability to generate visually polished front-end pages in particular, which makes it an attractive option for web developers and UI/UX designers.

Advanced Reasoning and Tool Integration

The model showcases clear improvements in reasoning performance and supports tool use during inference, leading to stronger overall capability. This makes GLM-4.6 particularly well-suited for complex problem-solving scenarios where multiple tools and reasoning steps are required.
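With tool use, the model does not execute anything itself: it returns a structured request naming a tool and its arguments, and the application runs the tool and sends the result back. The sketch below shows that application-side step. It assumes an OpenAI-style function-calling format (a `tools` list whose parameters are described in JSON Schema, and tool calls whose arguments arrive as a JSON string); check Z.ai's API documentation for the exact shape. The `word_count` tool is purely hypothetical.

```python
import json
from typing import Any, Callable

def word_count(text: str) -> int:
    """Hypothetical local tool the model may ask the application to run."""
    return len(text.split())

# Tool description sent with the request (assumed OpenAI-style schema).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "word_count",
        "description": "Count the words in a piece of text.",
        "parameters": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
    },
}]

# Maps tool names the model may request to local Python callables.
REGISTRY: dict[str, Callable[..., Any]] = {"word_count": word_count}

def run_tool_call(call: dict) -> dict:
    """Execute one model-requested tool call and wrap the result as a
    'tool' message for the next turn of the conversation."""
    name = call["function"]["name"]
    args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
    if name not in REGISTRY:
        result: Any = {"error": f"unknown tool: {name}"}
    else:
        result = REGISTRY[name](**args)
    return {"role": "tool", "tool_call_id": call["id"], "content": json.dumps(result)}

call = {"id": "call_1", "type": "function",
        "function": {"name": "word_count", "arguments": '{"text": "tools extend models"}'}}
print(run_tool_call(call))  # {'role': 'tool', 'tool_call_id': 'call_1', 'content': '3'}
```

Returning an error payload for unknown tools, rather than raising, lets the model see its mistake and recover on the next turn.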

Enhanced Agent Capabilities

GLM-4.6 performs better in tool-using and search-based agents and integrates more effectively with agent frameworks. This makes it a strong candidate for building AI assistants that work through multi-step workflows and call external tools reliably.
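At its core, a tool-using agent is a loop: send the conversation to the model, execute any tool calls it requests, append the results, and repeat until the model answers in plain text. The sketch below shows that loop under an assumed OpenAI-style message format. `scripted_model` is a hypothetical stand-in for a real GLM-4.6 API call and `fake_search` is a placeholder backend, so the example runs offline; a real framework would add error handling, logging, and token budgeting.

```python
import json
from typing import Callable

Message = dict
ModelFn = Callable[[list[Message]], Message]

def agent_loop(model: ModelFn, tools: dict[str, Callable[..., object]],
               user_prompt: str, max_steps: int = 5) -> str:
    """Alternate model turns and tool execution until the model replies
    without requesting tools, or give up after max_steps turns."""
    messages: list[Message] = [{"role": "user", "content": user_prompt}]
    for _ in range(max_steps):
        reply = model(messages)
        messages.append(reply)
        calls = reply.get("tool_calls") or []
        if not calls:
            return reply["content"]  # plain-text answer ends the loop
        for call in calls:
            fn = tools[call["function"]["name"]]
            args = json.loads(call["function"]["arguments"])
            messages.append({"role": "tool", "tool_call_id": call["id"],
                             "content": json.dumps(fn(**args))})
    raise RuntimeError("agent did not finish within max_steps")

def scripted_model(messages: list[Message]) -> Message:
    """Hypothetical stand-in for GLM-4.6: requests a search first, then
    answers from the tool result."""
    tool_msgs = [m for m in messages if m["role"] == "tool"]
    if not tool_msgs:
        return {"role": "assistant", "content": None, "tool_calls": [{
            "id": "call_1", "type": "function",
            "function": {"name": "search",
                         "arguments": json.dumps({"query": "GLM-4.6 context"})},
        }]}
    return {"role": "assistant",
            "content": f"Found: {json.loads(tool_msgs[-1]['content'])}"}

def fake_search(query: str) -> str:
    """Placeholder search backend that ignores the query."""
    return "200K tokens"

print(agent_loop(scripted_model, {"search": fake_search}, "How long is GLM-4.6's context?"))
# Found: 200K tokens
```

The `max_steps` cap is a simple guard against a model that keeps requesting tools without converging on an answer.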

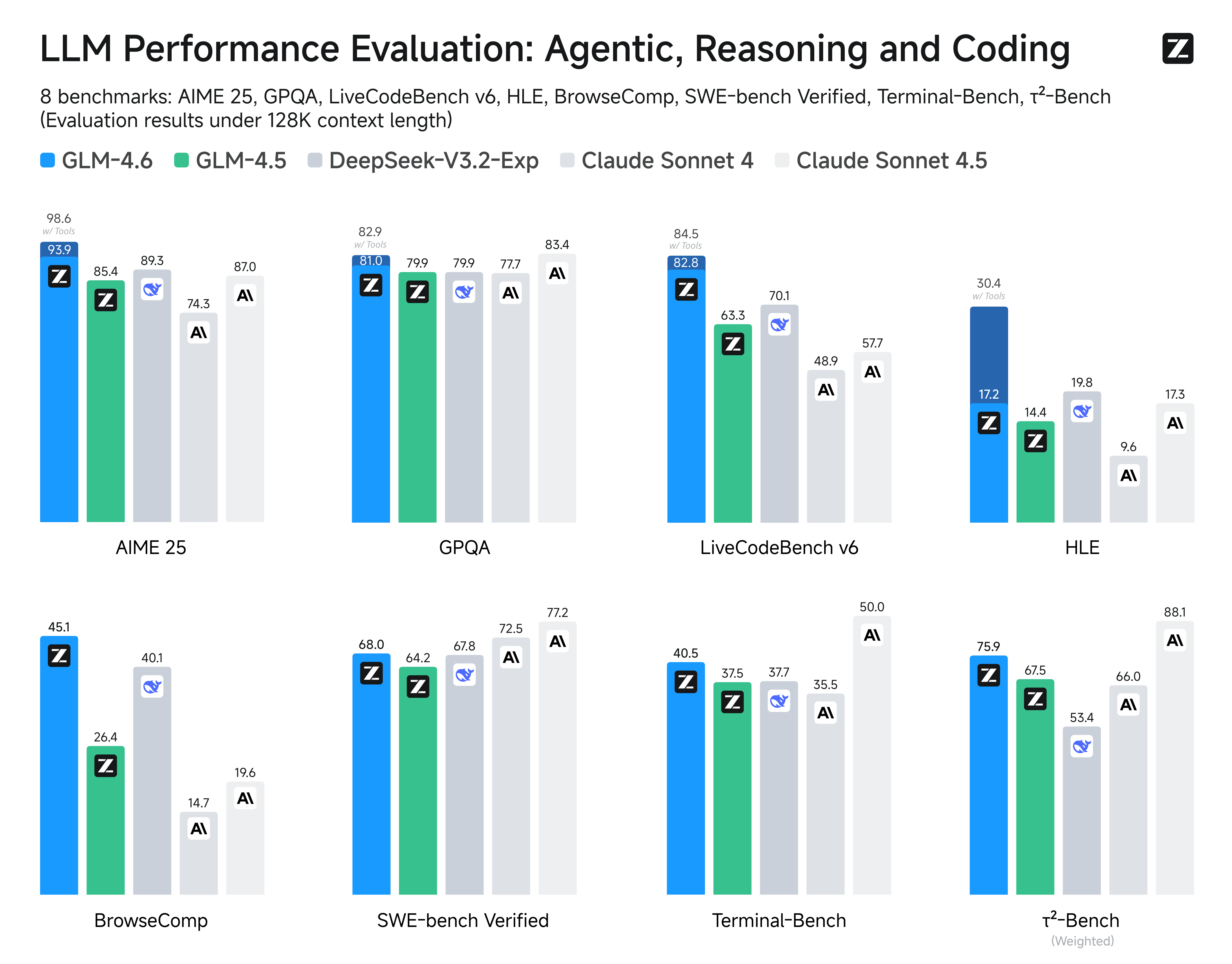

Benchmark Performance: Competing with the Best

Z.ai evaluated GLM-4.6 on eight public benchmarks covering agentic, reasoning, and coding tasks. According to Z.ai, the results show clear gains over GLM-4.5 and competitive advantages over leading Chinese and international models such as DeepSeek-V3.1-Terminus and Claude Sonnet 4.

Technical Specifications and Availability

- Model Size: 357B parameters

- Tensor Types: BF16, F32

- License: MIT

- Technical Report: arXiv:2508.06471 (the GLM-4.5 technical report, which covers this model family)

- Available Formats: Safetensors
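The parameter count and tensor types give a rough sense of what local deployment involves. This back-of-the-envelope sketch estimates the memory needed just to hold the weights, bracketing the two listed precisions (2 bytes per parameter for BF16, 4 for F32). It treats 357B as an exact count and ignores the KV cache, activations, and framework overhead, so real requirements will be higher.

```python
# Bytes per parameter for the tensor types listed in the specifications.
BYTES_PER_PARAM = {"BF16": 2, "F32": 4}

def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes).
    Excludes KV cache, activations, and runtime overhead."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

for dtype in ("BF16", "F32"):
    print(dtype, weight_memory_gb(357e9, dtype), "GB")
# BF16 714.0 GB
# F32 1428.0 GB
```

Even the BF16 figure exceeds the memory of any single current GPU, so local deployment at this precision means sharding the model across several accelerators.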

Getting Started with GLM-4.6

Access Options

- Z.ai API Platform: Access GLM-4.6 through Z.ai's official API services at docs.z.ai/guides/llm/glm-4.6

- Direct Chat Interface: Try GLM-4.6 instantly at chat.z.ai

- Hugging Face: Available on the Hugging Face platform for local deployment
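For API access, a request can be assembled with nothing but the Python standard library. This is a minimal sketch that assumes an OpenAI-compatible chat-completions endpoint at `https://api.z.ai/api/paas/v4/chat/completions`, the model identifier `glm-4.6`, Bearer-token authentication, and an OpenAI-style response shape; verify all of these against docs.z.ai/guides/llm/glm-4.6 before relying on them. The `ZAI_API_KEY` environment variable name is hypothetical.

```python
import json
import os
import urllib.request

# Assumed endpoint and model id; confirm both in Z.ai's documentation.
API_URL = "https://api.z.ai/api/paas/v4/chat/completions"
MODEL_ID = "glm-4.6"

def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a chat-completions POST request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 1.0,  # Z.ai's suggested temperature for general evaluations
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )

def send(req: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))

# Only hits the network when run directly with a (hypothetically named) key variable set.
if __name__ == "__main__" and os.environ.get("ZAI_API_KEY"):
    reply = send(build_request("Summarize GLM-4.6 in one line.", os.environ["ZAI_API_KEY"]))
    print(reply["choices"][0]["message"]["content"])  # assumed OpenAI-style response
```

Separating request construction from sending keeps the payload easy to inspect and test without spending API credits.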

Recommended Configuration

For general evaluations, Z.ai recommends using a sampling temperature of 1.0. For code-related tasks, they suggest:

- top_p = 0.95

- top_k = 40
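Z.ai's sampling recommendations fit in a small helper. This sketch assumes the code-task settings are layered on top of the general temperature of 1.0, and the `task` labels are hypothetical. Note that `top_k` is not part of every chat-completions API: local inference stacks such as Hugging Face Transformers' `generate` and vLLM's `SamplingParams` accept it, but confirm whether Z.ai's hosted API does before sending it.

```python
def sampling_params(task: str = "general") -> dict:
    """Sampling settings following Z.ai's recommendation: temperature 1.0
    in general, plus top_p/top_k for code-related tasks."""
    params: dict = {"temperature": 1.0}
    if task == "code":
        params.update(top_p=0.95, top_k=40)
    return params

print(sampling_params())        # {'temperature': 1.0}
print(sampling_params("code"))  # {'temperature': 1.0, 'top_p': 0.95, 'top_k': 40}
```

Keeping these values in one function makes it easy to apply the same settings across API calls and local runs.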

Community and Resources

Z.ai provides several community and documentation resources for GLM-4.6:

- Discord Community: Join the active community at discord.gg/QR7SARHRxK

- Technical Documentation: Comprehensive guides available on Zhipu AI's technical documentation platform

- GitHub Repository: Detailed implementation examples and resources at github.com/zai-org/GLM-4.5

Why GLM-4.6 Matters for Developers

For AI Application Developers

The extended context window and enhanced reasoning capabilities make GLM-4.6 particularly valuable for:

- Complex document analysis and summarization

- Multi-step problem solving

- Advanced code generation and debugging

- Sophisticated agent development

For Researchers and Enterprises

The model's competitive performance against established players like DeepSeek and Claude, combined with its MIT license, makes it an attractive option for both academic research and commercial applications.

Integration with ChatFrame

For users of ChatFrame, GLM-4.6 is another strong option among the growing set of supported models. ChatFrame's unified interface makes it easy to compare GLM-4.6's output against other leading models and to use it where its strengths, such as long context and front-end code generation, fit the task.

The Future of Open-Weight AI Models

GLM-4.6's release adds to the evidence that openly licensed models can compete with, and on some benchmarks surpass, proprietary systems. With its strong technical specifications and competitive benchmark results, GLM-4.6 is well placed to become a go-to choice for developers and researchers seeking powerful, accessible AI capabilities.

Getting Involved

The GLM-4.6 ecosystem is growing quickly: at the time of writing, 57 Hugging Face Spaces already use the model, and an active community of developers and researchers has formed around it. Whether you want to integrate it into your applications, contribute to its development, or simply explore its capabilities, now is a good time to get involved with this cutting-edge model.

Ready to experience the power of GLM-4.6? Try it today through Z.ai's platform or integrate it into your ChatFrame workflow to see how it compares to other leading models in real-world applications.